Truly effortless Bayesian Deep Learning in Julia

Deep learning has dominated AI research in recent years, but how much promise does it really hold? That is very much an ongoing and increasingly polarising debate that you can follow live on Twitter. On one side you have optimists like Ilya Sutskever, chief scientist of OpenAI, who believes that large deep neural networks may already be slightly conscious (note the “may” and “slightly”, and presumably only if you go deep enough). On the other side you have prominent skeptics like Judea Pearl, who has long argued that deep learning still boils down to curve fitting: purely associational and not even remotely intelligent (Pearl and Mackenzie 2018).

The case for Bayesian Deep Learning

Whatever side of this entertaining Twitter dispute you find yourself on, the reality is that deep-learning systems have already been deployed at large scale in both academia and industry. More pressing debates therefore revolve around the trustworthiness of these existing systems. How robust are they, and how exactly do they arrive at decisions that affect each and every one of us? Robustifying deep neural networks generally involves some form of adversarial training, which is costly, can hurt generalization (Raghunathan et al. 2019) and ultimately does not guarantee stability (Bastounis, Hansen, and Vlačić 2021). With respect to interpretability, surrogate explainers like LIME and SHAP are among the most popular tools, but they too have been shown to lack robustness (Slack et al. 2020).

So why exactly are deep neural networks unstable and opaque? The first thing to note is that the number of free parameters is typically huge (if you ask Mr Sutskever, it probably cannot be huge enough!). That alone makes it very hard to monitor and interpret the inner workings of deep-learning algorithms. Perhaps more importantly, though, the number of parameters is generally huge relative to the size of the data:

[…] deep neural networks are typically very underspecified by the available data, and […] parameters [therefore] correspond to a diverse variety of compelling explanations for the data. (Wilson 2020)

In other words, training a single deep neural network may (and usually does) lead to one arbitrary parameter specification that fits the underlying data very well. But in all likelihood there are many other specifications that also fit the data very well. This is both a strength and a vulnerability of deep learning: it is a strength because it typically allows us to find one such “compelling explanation” for the data with ease through stochastic optimization; it is a vulnerability because one has to wonder:

How compelling is an explanation really if it competes with many other equally compelling, but potentially very different explanations?

A scenario like this very much calls for treating predictions from deep learning models probabilistically (Wilson 2020). Formally, we are interested in estimating the posterior predictive distribution as the following Bayesian model average (BMA):

$$
p(y \mid x, \mathcal{D}) = \int p(y \mid x, \theta)\, p(\theta \mid \mathcal{D})\, d\theta
$$

The integral implies that we essentially need many predictions from many different specifications of the model. Unfortunately, this means more work for us, or rather for our computers. Fortunately, researchers have proposed many ingenious ways to approximate this integral in recent years: Gal and Ghahramani (2016) propose using dropout at test time, while Lakshminarayanan et al. (2016) show that averaging over an ensemble of just five models seems to do the trick. Still, despite their simplicity and usefulness, these approaches involve additional computational costs compared to training just a single network. As we shall see now, though, another promising approach has recently entered the limelight: Laplace approximation (LA).
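In its simplest form, the integral can be approximated by Monte Carlo: draw a number of parameter vectors from (an approximation of) the posterior and average the predicted probabilities. Deep ensembles can be read in the same spirit, with each independently trained network playing the role of one sample. The sketch below assumes a logistic model with a single weight vector and a Gaussian posterior whose mean `θ_hat` and covariance `Σ` are made-up illustrative values, not the output of any fitted model.

```julia
using LinearAlgebra, Random, Statistics

# Logistic sigmoid: maps a logit to a probability.
sigmoid(z) = 1 / (1 + exp(-z))

# Monte Carlo estimate of the Bayesian model average
#   p(y = 1 | x, D) ≈ (1/S) Σₛ p(y = 1 | x, θₛ),  with θₛ ~ p(θ | D).
# `θ_samples` is a d × S matrix whose columns are posterior draws.
bma_predict(x, θ_samples) = mean(sigmoid(dot(θ, x)) for θ in eachcol(θ_samples))

# Hypothetical Gaussian posterior N(θ_hat, Σ); values are illustrative only.
Random.seed!(42)
θ_hat = [1.0, -2.0]
Σ = [0.5 0.1; 0.1 0.3]
S = 10_000
θ_samples = θ_hat .+ cholesky(Σ).L * randn(2, S)   # each column ~ N(θ_hat, Σ)

x = [3.0, 0.5]
p_plugin = sigmoid(dot(θ_hat, x))     # prediction from a single parameter vector
p_bma = bma_predict(x, θ_samples)     # average over many plausible parameter vectors
println("plugin: ", p_plugin, "  BMA: ", p_bma)
```

Here the averaged prediction is pulled towards 0.5 relative to the plugin estimate, because many plausible parameter vectors disagree about this particular input.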

If you have read my previous post on Bayesian Logistic Regression, then the term Laplace should already sound familiar to you. As a matter of fact, we will see that all concepts covered in that previous post can be naturally extended to deep learning. While some of these concepts will be revisited below, I strongly recommend you check out the previous post before reading on here. Without further ado, let us now see how LA can be used for truly effortless deep learning.

Laplace Approximation

While LA was first proposed in the 18th century, it has so far not attracted serious attention from the deep learning community, largely because it involves a potentially large Hessian computation. Daxberger et al. (2021) are on a mission to change the perception that LA has no use in DL: in their NeurIPS 2021 paper they demonstrate empirically that LA can be used to produce Bayesian model averages that are at least on par with existing approaches in terms of uncertainty quantification and out-of-distribution detection, while being significantly cheaper to compute. They show that recent advances in automatic differentiation can be leveraged to produce fast and accurate approximations of the Hessian, and they even provide a fully-fledged Python library that can be used with any pretrained PyTorch model. For this post, I have built a much less comprehensive, pure-Julia equivalent of their package: BayesLaplace.jl can be used with deep learning models built in Flux.jl, which is Julia’s main DL library. As in the previous post on Bayesian logistic regression, I will rely on Julia code snippets instead of equations to convey the underlying maths. If you’re curious about the maths, the NeurIPS 2021 paper provides all the detail you need. You will also find a slightly more detailed version of this article on my blog.
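To give a flavour of what such Hessian approximations look like, the sketch below compares the exact Hessian of a regularized logistic loss with the empirical Fisher, which replaces second derivatives by a sum of outer products of per-example gradients. Daxberger et al. (2021) discuss this alongside other approximations such as the generalized Gauss-Newton matrix. In a deep network the per-example gradients would come from automatic differentiation; here they are written out analytically for logistic regression to keep the example self-contained. The weights `w`, the prior precision `λ` and the toy data are hypothetical.

```julia
using LinearAlgebra, Random

sigmoid(z) = 1 / (1 + exp(-z))

# Per-example gradients of the cross-entropy loss, (μₙ - yₙ) xₙ, stored column-wise (d × N).
per_example_grads(w, X, y) = X .* (sigmoid.(X' * w) .- y)'

# Exact Hessian of the regularized logistic loss: Σₙ μₙ(1 - μₙ) xₙxₙᵀ + λI.
# For logistic regression this coincides with the generalized Gauss-Newton matrix.
function exact_hessian(w, X; λ = 0.5)
    μ = sigmoid.(X' * w)
    return Symmetric(X * Diagonal(μ .* (1 .- μ)) * X' + λ * I)
end

# Empirical Fisher: Σₙ gₙgₙᵀ + λI, built purely from first-order information.
function empirical_fisher(w, X, y; λ = 0.5)
    G = per_example_grads(w, X, y)
    return Symmetric(G * G' + λ * I)
end

# Hypothetical toy data and weights, for illustration only.
Random.seed!(0)
X = randn(2, 100)                          # 2 features × 100 observations
y = Float64.(X[1, :] .- X[2, :] .> 0)
w = [1.5, -1.5]

H = exact_hessian(w, X)
F = empirical_fisher(w, X, y)
println("exact Hessian:\n", Matrix(H), "\nempirical Fisher:\n", Matrix(F))
```

Both matrices are symmetric positive definite thanks to the prior term; how closely the empirical Fisher tracks the exact Hessian depends on the model and on how well it fits the data.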

From Bayesian Logistic Regression …

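Let’s recap: in the case of logistic regression we had assumed a zero-mean Gaussian prior for the weights that are used to compute logits, which in turn are fed to a sigmoid function to produce probabilities. We saw that under this assumption solving the logistic regression problem corresponds to minimizing the following differentiable loss function:

$$
\ell(w) = -\sum_{n=1}^{N} \left[ y_n \log \mu_n + (1 - y_n) \log (1 - \mu_n) \right] + \frac{1}{2} w^\top H_0 w, \qquad \mu_n = \sigma(w^\top x_n),
$$

where $H_0$ denotes the precision matrix of the Gaussian prior.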

As our first step towards Bayesian deep learning, we observe the following: the loss function above corresponds to the objective faced by a single-layer artificial neural network with sigmoid activation and weight decay. In other words, regularized logistic regression is equivalent to a very simple neural network architecture and hence it is not surprising that underlying concepts can in theory be applied in much the same way.
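To make the equivalence concrete, here is a minimal sketch in plain Julia (no Flux) of the regularized logistic loss, written in terms of logits in the same spirit as `Flux.Losses.logitbinarycrossentropy`, together with its gradient and a simple gradient-descent loop to the MAP estimate. It assumes a zero-mean isotropic Gaussian prior with precision `λ`, so that the prior term reduces to ordinary weight decay; the toy data, step size `η` and iteration count are hypothetical choices for illustration.

```julia
using LinearAlgebra, Random

sigmoid(z) = 1 / (1 + exp(-z))

# Numerically stable binary cross-entropy on a logit z: log(1 + eᶻ) - y z.
bce_logit(z, y) = max(z, 0) - y * z + log1p(exp(-abs(z)))

# Regularized loss = negative log-likelihood + weight decay from a N(0, λ⁻¹I) prior.
# Columns of X are observations, so X' * w gives one logit per observation.
loss(w, X, y; λ = 0.5) = sum(bce_logit.(X' * w, y)) + λ / 2 * dot(w, w)

# Gradient of the loss: Σₙ (σ(wᵀxₙ) - yₙ) xₙ + λ w.
grad(w, X, y; λ = 0.5) = X * (sigmoid.(X' * w) .- y) .+ λ .* w

# Plain gradient descent towards the MAP estimate.
function fit_map(X, y; λ = 0.5, η = 0.01, iters = 5_000)
    w = zeros(size(X, 1))
    for _ in 1:iters
        w .-= η .* grad(w, X, y; λ = λ)
    end
    return w
end

# Hypothetical, linearly separable toy data.
Random.seed!(1)
X = randn(2, 100)
y = Float64.(X[1, :] .+ X[2, :] .> 0)

w_map = fit_map(X, y)
println("w_map = ", w_map, ", loss = ", loss(w_map, X, y))
```

A single `Dense(2, 1)` layer trained on `logitbinarycrossentropy` plus an L2 penalty optimizes an objective of exactly this shape, which is why the two views coincide.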

So let’s quickly recap the next core concept: LA relies on the fact that the second-order Taylor expansion of our loss function around the maximum a posteriori (MAP) estimate amounts to a multivariate Gaussian distribution. In particular, that Gaussian is centered at the MAP estimate, with covariance equal to the inverse Hessian of the loss evaluated at the mode (Murphy 2022).
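For the logistic model the Hessian of the loss has a closed form, $\sum_n \mu_n(1-\mu_n)\, x_n x_n^\top + \lambda I$, so the Laplace approximation $p(w \mid \mathcal{D}) \approx \mathcal{N}(\hat{w}, H^{-1})$ takes only a few lines. The sketch below assumes a zero-mean Gaussian prior with precision `λ` and hypothetical toy data; it finds the MAP estimate by gradient descent and then inverts the Hessian evaluated at the mode. It is a hand-rolled illustration of the idea, not a description of how BayesLaplace.jl computes the Hessian internally.

```julia
using LinearAlgebra, Random

sigmoid(z) = 1 / (1 + exp(-z))

# Gradient and Hessian of the regularized logistic loss (prior N(0, λ⁻¹I)).
grad(w, X, y; λ = 0.5) = X * (sigmoid.(X' * w) .- y) .+ λ .* w
function hessian(w, X; λ = 0.5)
    μ = sigmoid.(X' * w)
    return Symmetric(X * Diagonal(μ .* (1 .- μ)) * X' + λ * I)
end

# Laplace approximation: find the mode, then use the inverse Hessian as covariance.
function laplace_logreg(X, y; λ = 0.5, η = 0.01, iters = 5_000)
    w_map = zeros(size(X, 1))
    for _ in 1:iters                         # gradient descent to the MAP estimate
        w_map .-= η .* grad(w_map, X, y; λ = λ)
    end
    H = hessian(w_map, X; λ = λ)             # curvature of the loss at the mode
    Σ = Symmetric(inv(Matrix(H)))            # posterior covariance ≈ H⁻¹
    return w_map, Σ, H
end

# Hypothetical, linearly separable toy data.
Random.seed!(1)
X = randn(2, 100)
y = Float64.(X[1, :] .+ X[2, :] .> 0)

w_map, Σ, H = laplace_logreg(X, y)
println("posterior mean: ", w_map)
println("posterior standard deviations: ", sqrt.(diag(Σ)))
```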

That is basically all there is to the story: if we have a good estimate of the Hessian, we have an analytical expression for an (approximate) posterior over parameters. So let’s go ahead and implement this approach in Julia using BayesLaplace.jl. The code below generates some toy data, builds and trains a single-layer neural network, and finally fits a post-hoc Laplace approximation:

```julia
# Import libraries.
using Flux, Plots, Random, PlotThemes, Statistics, BayesLaplace
theme(:wong)

# Toy data:
xs, y = toy_data_linear(100)
X = hcat(xs...)  # bring into tabular format (features × observations)
data = zip(xs, y)

# Neural network:
nn = Chain(Dense(2, 1))
λ = 0.5
sqnorm(x) = sum(abs2, x)
weight_regularization(λ=λ) = 1/2 * λ^2 * sum(sqnorm, Flux.params(nn))
loss(x, y) = Flux.Losses.logitbinarycrossentropy(nn(x), y) + weight_regularization()

# Training:
using Flux.Optimise: update!, ADAM
opt = ADAM()
epochs = 50
for epoch = 1:epochs
    for d in data
        gs = gradient(Flux.params(nn)) do
            loss(d...)
        end
        update!(opt, Flux.params(nn), gs)
    end
end

# Laplace approximation:
la = laplace(nn, λ=λ)
fit!(la, data)

# Posterior predictive as contour plots: plugin estimate (left) vs Laplace approximation (right).
p_plugin = plot_contour(X', y, la; title="Plugin", type=:plugin);
p_laplace = plot_contour(X', y, la; title="Laplace")
plot(p_plugin, p_laplace, layout=(1,2), size=(1000,400))
```

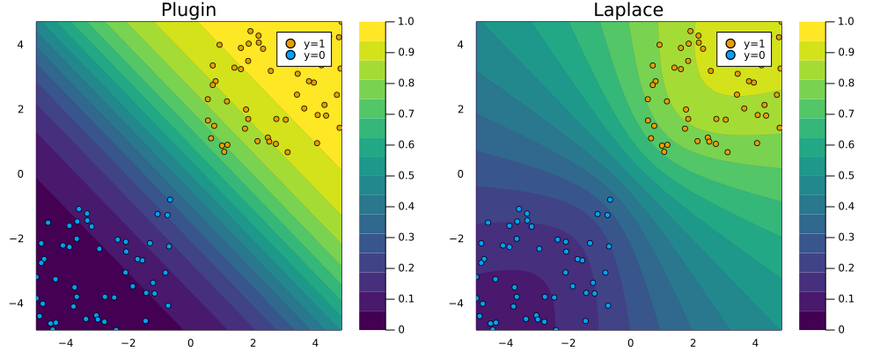

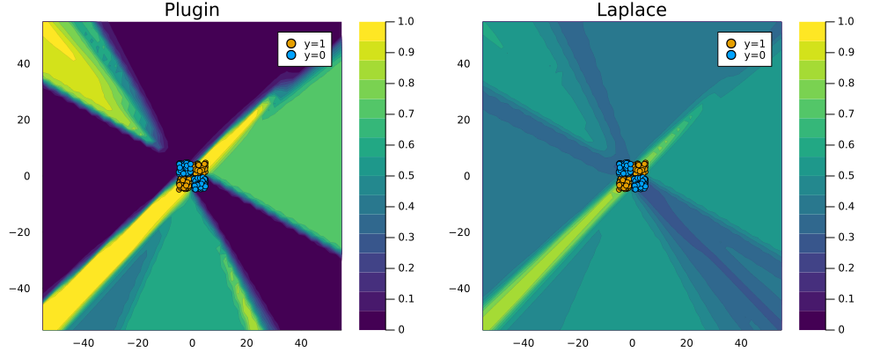

The resulting plot visualizes the posterior predictive distribution in the 2D feature space. For comparison, I have added the corresponding plugin estimate as well. Note how, for the Laplace approximation, the predicted probabilities fan out, indicating that confidence decreases in regions where data is scarce.

Figure 1: Posterior predictive distribution of logistic regression in the 2D feature space using plugin estimator (left) and Laplace approximation (right). Image by author.
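Why do the Laplace contours fan out? With a Gaussian posterior over weights, the logit $w^\top x$ is itself Gaussian, with a variance $x^\top \Sigma x$ that grows as we move away from the data. A common closed-form way to turn this into a probability is the so-called probit approximation, $p(y=1 \mid x, \mathcal{D}) \approx \sigma(\kappa\, \hat{w}^\top x)$ with $\kappa = (1 + \pi\, x^\top \Sigma x / 8)^{-1/2}$. Whether BayesLaplace.jl uses exactly this approximation is an assumption here; the MAP weights `w_map` and covariance `Σ` below are hypothetical values chosen so that both test points receive the same plugin prediction.

```julia
using LinearAlgebra

sigmoid(z) = 1 / (1 + exp(-z))

# Plugin estimate: use the MAP weights only.
predict_plugin(x, w_map) = sigmoid(dot(w_map, x))

# Probit approximation to the Laplace posterior predictive:
#   p(y = 1 | x, D) ≈ σ(μₐ / √(1 + π vₐ / 8)),
# where μₐ = w_mapᵀx is the mean and vₐ = xᵀΣx the variance of the logit.
function predict_laplace(x, w_map, Σ)
    μa = dot(w_map, x)
    va = dot(x, Σ * x)
    κ = 1 / sqrt(1 + π * va / 8)
    return sigmoid(κ * μa)
end

# Hypothetical MAP estimate and posterior covariance, for illustration only.
w_map = [2.0, 2.0]
Σ = [0.2 0.0; 0.0 0.2]

x_near = [0.5, 0.5]      # assumed to lie close to the training data
x_far = [10.0, -9.0]     # same plugin logit (2.0), but far away from the data
for x in (x_near, x_far)
    println(x, ": plugin = ", round(predict_plugin(x, w_map); digits = 3),
            ", laplace = ", round(predict_laplace(x, w_map, Σ); digits = 3))
end
```

Both points sit on the same plugin contour, yet the Laplace prediction for the distant point is noticeably less confident: the contours of equal probability spread apart as we move away from the data.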

… to Bayesian Neural Networks

Now let’s step it up a notch: we will repeat the exercise from above, but this time for data that is not linearly separable, using a simple MLP instead of the single-layer neural network from before. The code below is almost identical:

```julia
# Import libraries.
using Flux, Plots, Random, PlotThemes, Statistics, BayesLaplace
theme(:wong)

# Toy data (not linearly separable):
xs, y = toy_data_non_linear(100)
X = hcat(xs...)  # bring into tabular format (features × observations)
data = zip(xs, y)

# Build MLP:
n_hidden = 32
D = size(X, 1)  # number of input features
nn = Chain(
    Dense(D, n_hidden, σ),
    Dense(n_hidden, 1)
)
λ = 0.01
sqnorm(x) = sum(abs2, x)
weight_regularization(λ=λ) = 1/2 * λ^2 * sum(sqnorm, Flux.params(nn))
loss(x, y) = Flux.Losses.logitbinarycrossentropy(nn(x), y) + weight_regularization()

# Training:
using Flux.Optimise: update!, ADAM
opt = ADAM()
epochs = 200
for epoch = 1:epochs
    for d in data
        gs = gradient(Flux.params(nn)) do
            loss(d...)
        end
        update!(opt, Flux.params(nn), gs)
    end
end

# Laplace approximation:
la = laplace(nn, λ=λ)
fit!(la, data)

# Posterior predictive as contour plots: plugin estimate (left) vs Laplace approximation (right).
p_plugin = plot_contour(X', y, la; title="Plugin", type=:plugin);
p_laplace = plot_contour(X', y, la; title="Laplace")
plot(p_plugin, p_laplace, layout=(1,2), size=(1000,400))
```

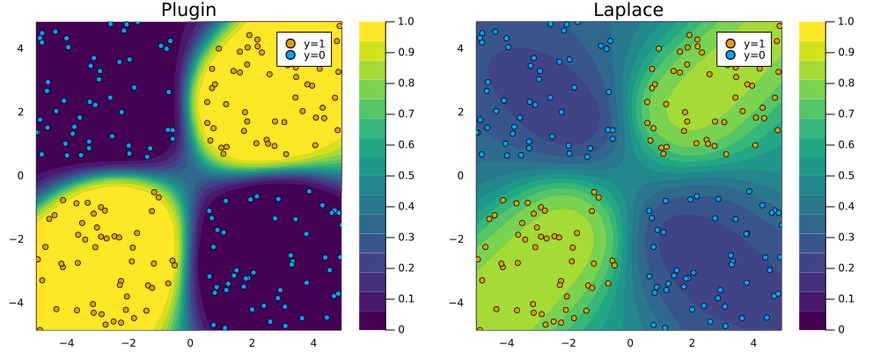

Figure 2 demonstrates that once again the Laplace approximation yields a posterior predictive distribution that is more conservative than the over-confident plugin estimate.

Figure 2: Posterior predictive distribution of MLP in the 2D feature space using plugin estimator (left) and Laplace approximation (right). Image by author.

To see why this is a desirable outcome, consider Figure 3, a zoomed-out version of Figure 2: the plugin estimator classifies with full confidence in regions entirely devoid of data. Arguably, the Laplace approximation produces a much more reasonable picture, even though it too could likely be improved by fine-tuning our prior and the neural network architecture.

Figure 3: Posterior predictive distribution of MLP in the 2D feature space using plugin estimator (left) and Laplace approximation (right). Zoomed out. Image by author.
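The probit approximation also gives some intuition for the zoomed-out picture, at least for a model whose logit is linear in the input: moving along a direction $d$ by a distance $t$, the plugin logit grows linearly in $t$, but so does the standard deviation of the Laplace logit, so the Laplace prediction levels off at $\sigma\big(\hat{w}^\top d / \sqrt{\pi\, d^\top \Sigma d / 8}\big)$ instead of approaching certainty. The sketch below assumes hypothetical MAP weights and covariance; for the MLP in Figure 3 the logit is not linear in the input, so treat this as intuition rather than a proof.

```julia
using LinearAlgebra

sigmoid(z) = 1 / (1 + exp(-z))

# Probit approximation to the Laplace posterior predictive (linear logit assumed).
function predict_laplace(x, w_map, Σ)
    κ = 1 / sqrt(1 + π * dot(x, Σ * x) / 8)
    return sigmoid(κ * dot(w_map, x))
end

# Hypothetical MAP weights and covariance (illustrative only).
w_map = [2.0, 1.0]
Σ = [1.0 0.1; 0.1 0.5]
d = [1.0, 0.0]                       # direction pointing away from the data

# Confidence of both estimators as we move further out along d.
for t in (1.0, 10.0, 100.0, 1000.0)
    x = t .* d
    println("t = ", t, ": plugin = ", sigmoid(dot(w_map, x)),
            ", laplace = ", predict_laplace(x, w_map, Σ))
end

# As t → ∞ the Laplace prediction converges to this limit, which stays below 1.
limit = sigmoid(dot(w_map, d) / sqrt(π * dot(d, Σ * d) / 8))
println("limit of Laplace confidence: ", limit)
```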

Wrapping up

Recent state-of-the-art research on neural information processing suggests that Bayesian deep learning can be effortless: Laplace approximation for deep neural networks appears to work very well, and it does so at minimal computational cost (Daxberger et al. 2021). This is great news, because the case for going Bayesian is strong: society increasingly relies on complex automated decision-making systems that need to be trustworthy. More and more of these systems involve deep learning, which in and of itself is not trustworthy. We have seen that there typically exist various viable parameterizations of deep neural networks, each with its own distinct and compelling explanation for the data at hand. When faced with many viable options, don’t put all of your eggs in one basket. In other words, go Bayesian!

Resources

To get started with Bayesian deep learning I have found many useful and free resources online, some of which are listed below:

- Turing.jl tutorial on Bayesian deep learning in Julia.

- Various RStudio AI blog posts including this one and this one.

- TensorFlow blog post on regression with probabilistic layers.

- Kevin Murphy’s draft textbook, now also available in print.

References

Bastounis, Alexander, Anders C Hansen, and Verner Vlačić. 2021. “The Mathematics of Adversarial Attacks in AI-Why Deep Learning Is Unstable Despite the Existence of Stable Neural Networks.” arXiv Preprint arXiv:2109.06098.

Daxberger, Erik, Agustinus Kristiadi, Alexander Immer, Runa Eschenhagen, Matthias Bauer, and Philipp Hennig. 2021. “Laplace Redux-Effortless Bayesian Deep Learning.” Advances in Neural Information Processing Systems 34.

Gal, Yarin, and Zoubin Ghahramani. 2016. “Dropout as a Bayesian Approximation: Representing Model Uncertainty in Deep Learning.” In International Conference on Machine Learning, 1050–59. PMLR.

Lakshminarayanan, Balaji, Alexander Pritzel, and Charles Blundell. 2016. “Simple and Scalable Predictive Uncertainty Estimation Using Deep Ensembles.” arXiv Preprint arXiv:1612.01474.

Murphy, Kevin P. 2022. Probabilistic Machine Learning: An Introduction. MIT Press.

Pearl, Judea, and Dana Mackenzie. 2018. The Book of Why: The New Science of Cause and Effect. Basic Books.

Raghunathan, Aditi, Sang Michael Xie, Fanny Yang, John C Duchi, and Percy Liang. 2019. “Adversarial Training Can Hurt Generalization.” arXiv Preprint arXiv:1906.06032.

Slack, Dylan, Sophie Hilgard, Emily Jia, Sameer Singh, and Himabindu Lakkaraju. 2020. “Fooling LIME and SHAP: Adversarial Attacks on Post Hoc Explanation Methods.” In Proceedings of the AAAI/ACM Conference on AI, Ethics, and Society, 180–86.

Wilson, Andrew Gordon. 2020. “The Case for Bayesian Deep Learning.” arXiv Preprint arXiv:2001.10995.

Originally published at https://www.paltmeyer.com on February 18, 2022.
